In 2025, artificial intelligence has transcended its traditional role of assisting in code generation to become a comprehensive, full-stack software engineering partner. This evolution is marked by the emergence of agentic AI systems capable of autonomously handling tasks ranging from design and development to deployment and optimization. As these systems mature, the role of human developers is shifting significantly—from hands-on coding to strategic oversight, system architecture, and business alignment.

From Code Completion to Autonomous Software Engineering

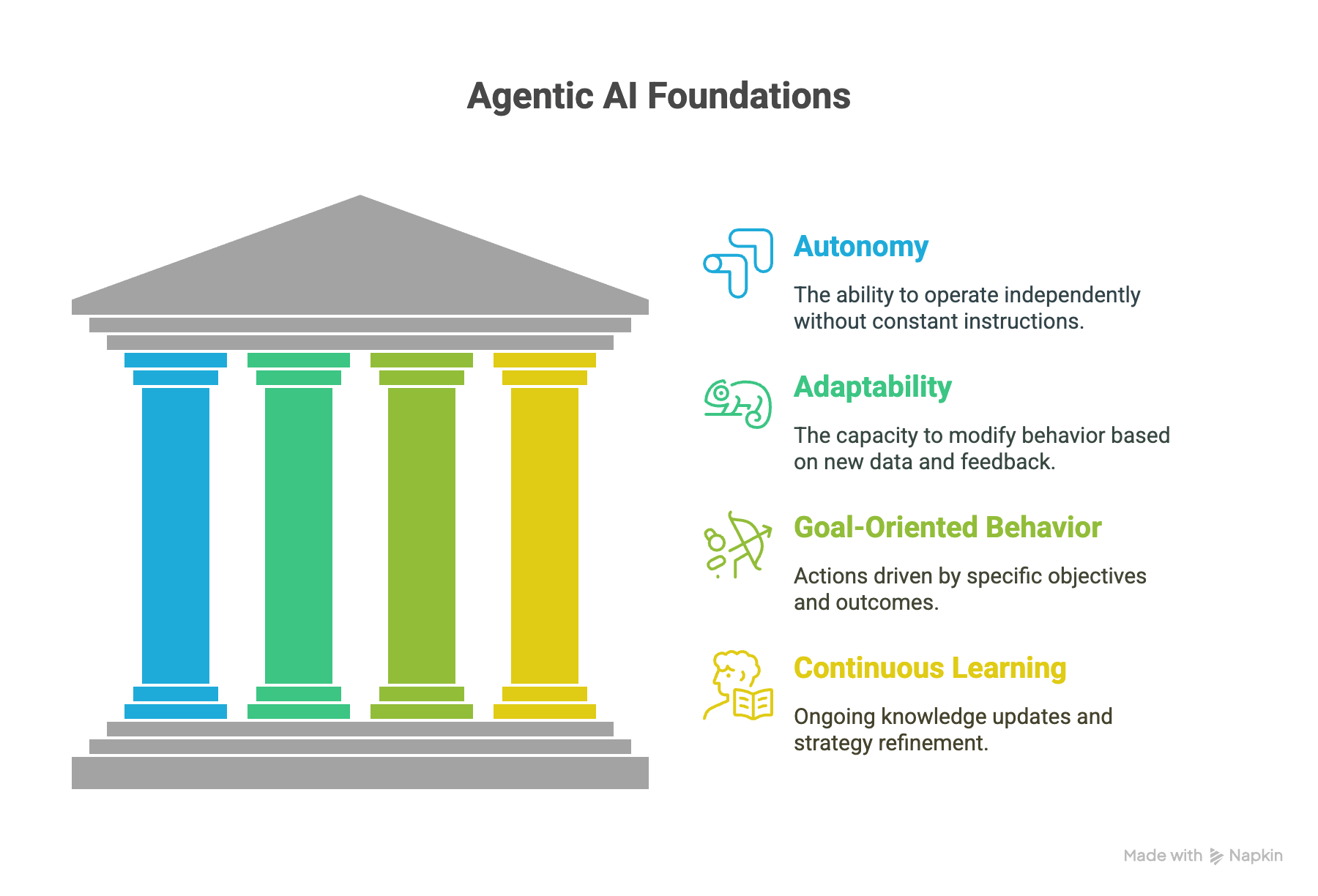

Agentic AI marks a significant step beyond earlier generative and predictive systems. Where those models required explicit human input or operated within narrow boundaries, agentic AI is designed to act autonomously, make decisions, and execute complex tasks across the entire software development lifecycle. These systems exhibit four core traits: autonomy, goal-oriented behavior, adaptability, and continuous learning. Together, these traits allow them to navigate dynamic environments, reason through challenges, and refine their outputs based on feedback.

The journey from basic code autocompletion tools to sophisticated agentic AI platforms has been both rapid and revolutionary. Today’s AI agents are no longer confined to assisting with syntax or code snippets; they are actively involved in system architecture design, managing multi-service integrations, deploying production pipelines, and optimizing software performance in real time. This evolution effectively positions them as autonomous engineering assistants, capable of contributing meaningfully across all layers of software development—from frontend prototypes to backend orchestration and infrastructure.

More than just enhancing productivity, this shift has far-reaching implications for the future of work and organizational structure. By offloading substantial technical responsibilities to agentic systems, individuals and teams can scale operations with unprecedented efficiency. We are entering an era where the concept of the “one-person unicorn”—a solo founder augmented by a constellation of intelligent agents—is not only plausible but increasingly within reach. In this new paradigm, developers transition from implementers to orchestrators, focusing on high-level architecture, strategic alignment with business goals, and ethical governance, while AI handles much of the execution and iteration beneath the surface.

Agentic AI: Core Principles and Architectural Foundations

The power and promise of agentic AI stem not only from the sophistication of the underlying models, but also from a modular architecture that promotes autonomy, adaptability, and long-term learning. Unlike traditional monolithic AI applications, agentic systems are architected as composable units—capable of perceiving their environment, making decisions, and acting toward defined goals without granular, human-driven control at every step.
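The perceive, decide, act cycle described above can be sketched in a few lines. This is a toy illustration only: `BuildEnvironment`, `decide`, and `run_agent` are hypothetical names invented for this example, and the "environment" is just a list of failing checks rather than a real CI system.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class BuildEnvironment:
    """Toy stand-in for a CI system: a list of failing checks to clear."""
    failing_checks: list[str] = field(default_factory=lambda: ["lint", "unit-tests"])

    def observe(self) -> list[str]:
        # Return a copy so the agent cannot mutate the environment directly.
        return list(self.failing_checks)

    def apply(self, action: str) -> None:
        # In this toy model, acting on "fix:<check>" simply clears that check.
        check = action.removeprefix("fix:")
        if check in self.failing_checks:
            self.failing_checks.remove(check)


def decide(observation: list[str]) -> str | None:
    """Pick the next action, or None once the goal (no failures) is met."""
    return f"fix:{observation[0]}" if observation else None


def run_agent(env: BuildEnvironment, max_steps: int = 10) -> list[str]:
    """Loop perceive -> decide -> act until the goal is met or the budget runs out."""
    history: list[str] = []
    for _ in range(max_steps):
        action = decide(env.observe())  # perceive, then decide
        if action is None:
            break
        env.apply(action)               # act
        history.append(action)
    return history


print(run_agent(BuildEnvironment()))  # ['fix:lint', 'fix:unit-tests']
```

The `max_steps` budget is a deliberate design choice: even a simple loop like this should have a hard stop so that an agent that never reaches its goal cannot run indefinitely.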

Key Foundations of Agentic Intelligence

Autonomy

Autonomy is the cornerstone of agentic systems. An autonomous agent can independently assess situations, formulate decisions, and take actions without relying on explicit human instructions at every turn. This goes beyond task automation—these agents can dynamically prioritize, plan, and execute within unpredictable or evolving contexts, such as managing CI/CD pipelines, triaging incidents, or refactoring legacy codebases in production environments.

Adaptability

Adaptability enables agentic systems to respond intelligently to changing environments. Rather than operating on fixed rule sets, agents can draw on techniques such as reinforcement learning, contextual embeddings, and dynamic fine-tuning to adjust their behavior based on new inputs and outcomes. Whether it’s adjusting system design based on user feedback or recalibrating backend logic in response to infrastructure changes, adaptable agents evolve continuously to maintain relevance and precision.

Goal-Oriented Behavior

At the heart of every effective agent is a clear, structured objective. Goal-orientation ensures that agent actions are not isolated or reactive—they are aligned with specific, measurable outcomes. In advanced implementations, agents can pursue layered and hierarchical goals, balancing short-term sub-tasks (like API integration) with broader missions (such as delivering a feature end-to-end or maintaining system uptime).
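One way to picture layered goals is as a tree in which a parent goal is complete only when all of its sub-goals are. The sketch below assumes a hypothetical `Goal` class made up for this example; real agent frameworks represent plans in their own ways.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Goal:
    name: str
    done: bool = False  # only meaningful for leaf goals
    subgoals: list[Goal] = field(default_factory=list)

    def is_complete(self) -> bool:
        """A leaf is complete when marked done; a parent when all children are."""
        if not self.subgoals:
            return self.done
        return all(g.is_complete() for g in self.subgoals)

    def next_task(self) -> Goal | None:
        """Depth-first search for the first unfinished leaf goal."""
        if not self.subgoals:
            return None if self.done else self
        for g in self.subgoals:
            task = g.next_task()
            if task is not None:
                return task
        return None


# A broader mission (deliver a feature) with short-term sub-tasks beneath it.
feature = Goal(
    "deliver checkout feature end-to-end",
    subgoals=[
        Goal("integrate payments API", done=True),
        Goal("write integration tests"),
    ],
)
print(feature.next_task().name)  # write integration tests
print(feature.is_complete())     # False
```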

Continuous Learning

Traditional AI often operates in static cycles: train once, deploy, and retrain later. Agentic systems aim to break from this pattern. They are designed for in-situ learning, meaning they ingest new data, gather contextual feedback, and adjust their behavior while deployed rather than waiting for the next training cycle. This capability is especially critical in software development environments, where systems, codebases, and user expectations evolve constantly. Continuous learning helps agents remain effective and contextually aware, even as their ecosystem shifts.

Key Developments in Agentic AI

Model Context Protocol (MCP): Introduced by Anthropic in late 2024 and since adopted by major players like OpenAI and Google DeepMind, MCP has become a de facto standard for integrating AI agents with enterprise systems. It enables AI models to securely access and interact with tools like GitHub, Slack, and internal databases, facilitating context-aware and autonomous operations.

Google A2A Protocol (Agent2Agent): Announced by Google in April 2025 at Google Cloud Next, the A2A Protocol enables communication and task delegation between autonomous agents. This advancement marks a significant shift toward collaborative multi-agent systems where AI agents can dynamically divide responsibilities, share context, and complete complex workflows without human micromanagement. By standardizing interactions across agents with different capabilities, A2A paves the way for decentralized, scalable software engineering in which agents coordinate much of the work among themselves.

Claude 4 by Anthropic: Released in May 2025, the Claude Opus 4 and Claude Sonnet 4 models are optimized for coding and complex problem-solving. Claude Opus 4, in particular, can work autonomously on tasks for extended periods, showcasing the potential of AI in handling long-horizon software development projects.

Google's Gemini 2.5 Pro: First released in March 2025, this model gained an experimental "Deep Think" mode, announced at Google I/O 2025, that enhances its reasoning and coding capabilities. Integrated with tools like Android Studio, Gemini can transform UI mockups into functional code, streamlining the development process.

AlphaEvolve by Google DeepMind: This evolutionary coding agent autonomously discovers and refines algorithms, optimizing tasks like data center scheduling and TPU circuit design. It exemplifies AI's capability to innovate beyond human-designed solutions.
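To make the MCP entry above more concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The sketch below only builds such a request as a string. The helper `make_tool_call`, the tool name `search_issues`, and its arguments are hypothetical, and the MCP specification remains the authoritative source for the full schema, transport, and initialization handshake.

```python
import json
from itertools import count

# Monotonic request ids, as JSON-RPC expects each request to carry its own id.
_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool exposed by some issue-tracker server.
print(make_tool_call("search_issues", {"query": "flaky test"}))
```

Because every tool is reached through the same request shape, an agent can discover and call new tools without bespoke integration code for each one.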

The Evolving Role of the Software Engineer in an Agentic World

As agentic AI systems grow in capability and autonomy, the traditional responsibilities of software engineers are undergoing a profound transformation. Rather than diminishing the value of human developers, this shift is redefining their focus—elevating their roles from tactical execution to strategic oversight, architectural design, and creative innovation.

From Code Producer to System Orchestrator

Agentic AI is rapidly absorbing routine and repetitive tasks across the software development lifecycle (SDLC), from generating boilerplate code and writing unit tests to managing dependency updates and automating integration workflows. By reducing this operational friction, these systems free engineers to focus on what truly differentiates human intelligence: conceptual design, critical thinking, and systems-level problem-solving.

The modern engineer is evolving into a system thinker—someone who understands the interplay between user needs, business logic, system performance, and ethical impact. While AI can automate much of the "how," it still relies heavily on humans for defining the "why" and the "what." Tasks like architecting distributed systems, formulating innovative feature sets, and driving user-centered design remain squarely in the domain of human insight and judgment.

Human-in-the-Loop as a Design Principle

A key realization in the current agentic paradigm is that "human-in-the-loop" is not a temporary constraint—it's a fundamental design pattern. Even the most autonomous agents require human engineers for task delegation, interpretability, validation, and course correction. This ensures accountability, upholds quality and security standards, and embeds ethical safeguards into complex workflows.

Rather than replacing engineers, agentic systems are creating a new symbiotic model—where developers act as orchestrators and evaluators, setting high-level intent, monitoring AI decisions, and intervening when nuance, context, or creativity is required. In this model, effective collaboration between humans and agents will be a core competency. Engineers will need to learn not just how to code, but how to converse with agents, design prompts, validate AI-generated artifacts, and guide multi-agent workflows.
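Treating human-in-the-loop as a design pattern often comes down to an explicit approval gate in the agent's execution path. The following is a minimal sketch under stated assumptions: `ProposedChange`, `execute_with_oversight`, and the rule "anything touching production needs sign-off" are all invented for illustration, and a real system would route the `approve` callback to a review UI or ticketing workflow.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedChange:
    description: str
    touches_production: bool


def execute_with_oversight(
    change: ProposedChange,
    approve: Callable[[ProposedChange], bool],
) -> str:
    """Apply low-risk changes directly; route risky ones through a human reviewer."""
    if change.touches_production and not approve(change):
        return f"rejected: {change.description}"
    return f"applied: {change.description}"


# Stand-in reviewer that declines everything; a person would decide in practice.
def cautious_reviewer(change: ProposedChange) -> bool:
    return False


print(execute_with_oversight(ProposedChange("update README", False), cautious_reviewer))
# applied: update README
print(execute_with_oversight(ProposedChange("migrate prod database", True), cautious_reviewer))
# rejected: migrate prod database
```

Passing the reviewer in as a callback keeps the policy (what needs approval) separate from the mechanism (how approval is obtained), so either can change without touching the other.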

A Collaborative Future

Expect to see a shift toward teams augmented by AI counterparts, where the optimal human-to-agent ratio becomes a strategic decision. Engineers will increasingly be evaluated not only on their technical output, but on their ability to guide agentic systems toward meaningful, ethical, and high-impact outcomes.

The future of software engineering is neither fully human-driven nor entirely autonomous; it is collaborative by design. As agents shoulder more of the operational and technical burden across the software development lifecycle, developers' responsibilities move to high-leverage domains that demand creativity, judgment, and strategic thinking.

Human engineers are no longer just writing code—they're setting intent, defining constraints, guiding architecture, and validating outcomes. The most effective engineers in this new paradigm are those who can work alongside AI agents, providing direction, interpreting results, and ensuring that AI outputs align with broader organizational goals.

Emerging Responsibilities in an Agentic World

Architectural Design:

Engineers are shifting from writing individual functions to designing entire systems. They must ensure that AI-generated components adhere to architectural patterns, interface standards, and long-term scalability requirements.

Business Alignment:

Translating business objectives into actionable technical strategies is a uniquely human function. Engineers will continue to play a key role in framing problems, setting success criteria, and guiding agents to produce value-aligned solutions.

Ethical Oversight:

As AI systems gain autonomy, ethical stewardship becomes essential. Engineers are increasingly responsible for ensuring that systems operate transparently, respect user privacy, mitigate bias, and adhere to regulatory standards.

Strategic Problem-Solving:

With tactical implementation tasks delegated to agents, engineers are empowered to focus on formulating innovative features, improving user experience, and addressing emergent challenges holistically.

Continuous Learning and AI Fluency:

The rapid pace of AI innovation demands that developers stay up to date with emerging tools, models, and agent frameworks. Fluency in prompt engineering, agent orchestration, and AI system design is becoming a core skill set.

From Execution to Orchestration

In this emerging model, the software engineer's role is evolving from execution to orchestration. While AI agents can generate code, test suites, and integration pipelines, it’s human engineers who ensure these outputs align with strategic goals and long-term vision.

As organizations explore multi-agent systems and AI-augmented SDLCs, we are entering an era where the developer’s creativity, system-level thinking, and ethical leadership will define success far more than raw coding output. The agentic future doesn’t reduce the engineer’s relevance; it redefines it.